This post is a bit divergent from my usual write-ups, but I know a few people offhand who are looking to upgrade their Macs and are a bit apprehensive about doing so. Well, I’ve gone through the pain myself, so you don’t have to!

A bit ago, I got the opportunity to upgrade my work laptop to an M1 MacBook Pro. And yes, it has been painful. But also, it’s been worth it. I’ve gotten past most of the pain, but it took me a while to sit my lazy self down and figure out a workflow that works for me.

This isn’t a post to convince anyone to upgrade, or not to upgrade. It also won’t cover how I set up my terminal, zsh, or editor for regular development. I’m just sharing how I’ve set up my machine for development on an M1 specifically. I focus on Python development, but parts of this should help with any type of development.

What’s Different About This Post

There are many write-ups on how folks set up their Macs for development. However, very few (if any) set up their computers to make use of the M1 processor; many just use the Rosetta emulator for everything.

I’ve created a split setup, which tries to take advantage of the tools and packages that have indeed been made available for M1 machines, and uses an emulated x86 environment for those that haven’t.

Disclaimers

- I focus on setting up `zsh` with Homebrew, `pyenv`, `pyenv-virtualenv`, and `pipx`. However, the approach can be applied with `bash` or `fish`, and to Ruby’s `rbenv`, Java’s `jenv`, Node’s `nvm`, or anything else that’s essentially path management.

- I specifically avoid using `conda`, as I’ve found `conda` and virtual environments do not play nicely together. I’m sure `conda` can be helpful for some folks, especially those working only on science-, math-, and/or research-related projects.

- I may be missing some steps; it was months ago that I got this computer. I apologize! But I trust y’all are smart enough to figure out what’s missing.

- My approach may not work for you and your workflow, or may not go far enough for you. Feel free to use the comment section to share what you’ve done differently.

- See Miscellany for tidbits on Docker and Tensorflow.

- I’ll try to keep this updated as I discover new quirks.

Step 0: About This Mac

To start, here are the relevant details of the machine I’m working with, as of the date of this post:

- MacBook Pro (14-inch, 2021)

- OS: Monterey, 12.4

- Chip: Apple M1 Max

Step 1: Rosetta 2

Rosetta 2 is an “emulator” (more accurately, a translator) that lets software built for Intel-based processors run on Apple Silicon/M1 processors.

While many apps for macOS have transitioned to running on M1 machines, there is still a lot of non-user-facing (a.k.a. developer-facing) software and tooling that does not play nicely. For instance, in Python there are many packages with C extensions whose binaries are not yet built for the M1, causing a lot of headaches (I’m looking at you, grpcio, tensorflow, librosa). Then there’s Docker, which runs fine on Apple Silicon but can cause frustration when building images to deploy to a non-M1 environment. Enter: Rosetta 2.

Setup

In a terminal, run:

```shell
softwareupdate --install-rosetta --agree-to-license
```
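If you keep a bootstrap script for new machines, you may want it to run the installer only on Apple Silicon. Note that `uname -m` can’t be used for this check, since it reports `x86_64` inside a Rosetta shell (see Step 2). A minimal sketch, assuming the `hw.optional.arm64` sysctl key, which should read `1` on Apple Silicon and be absent on Intel Macs; both function names are hypothetical:

```shell
# Hypothetical bootstrap helpers (names are illustrative, not from any tool).

# Succeeds when $1 is "1", i.e. the assumed output of
# `sysctl -n hw.optional.arm64` on Apple Silicon hardware.
needs_rosetta() {
  [ "$1" = "1" ]
}

# Installs Rosetta 2 only on Apple Silicon. Defined here, not called.
install_rosetta_if_needed() {
  if needs_rosetta "$(sysctl -n hw.optional.arm64 2>/dev/null)"; then
    softwareupdate --install-rosetta --agree-to-license
  else
    echo "Not Apple Silicon; skipping Rosetta 2 install." >&2
  fi
}
```

Call `install_rosetta_if_needed` from your bootstrap script instead of running `softwareupdate` directly.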

Optional Step 2: iTerm2 for Rosetta and Native

This step is entirely optional. However, if you choose to skip this step, you’ll want to do Step 3.3.

You may find it a lot easier to have two copies of iTerm.app (or Terminal.app), one that runs with Rosetta, and one that does not. Having both makes it easy to visually separate which environment you’re working in, as you can now customize the look and theme of each terminal app.

Setup #

-

Open Finder to

/Applications(or/Applications/Utilitiesif usingTerminal.app). -

Create a copy of

iTerm.app(orTerminal.app). Name the copyRosetta-iTerm.app(orRosetta-Terminal.app, or whatever that makes sense to you).

-

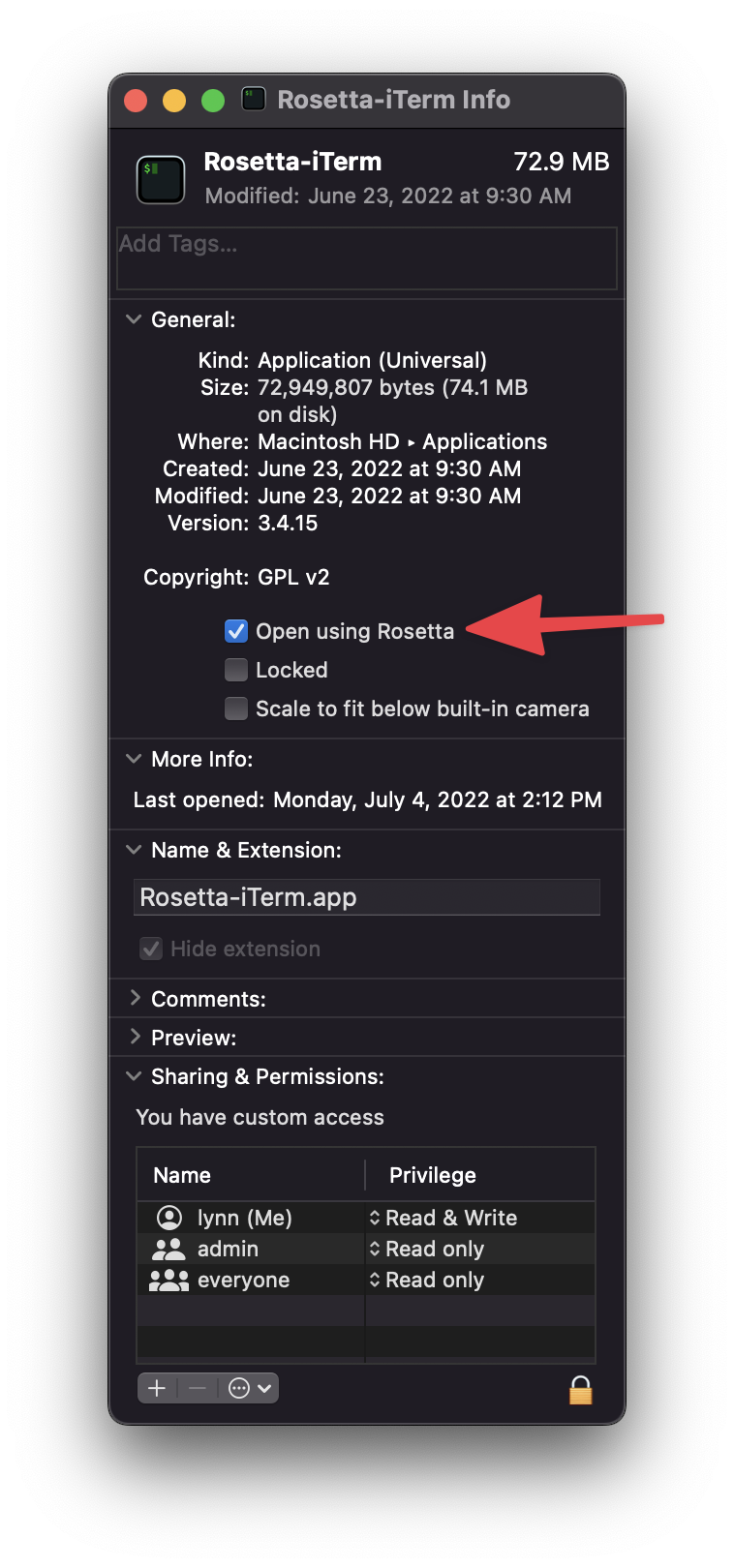

Right-click on the new Rosetta terminal copy, and click “Get Info”.

-

Check “Open using Rosetta” then close the “Get Info” window.

-

Open the Rosetta-version of your terminal app and confirm it’s using Rosetta:

$ arch i386 $ uname -m x86_64 -

Open the native version of your terminal app to see what the output of those commands look like otherwise:

$ arch arm64 $ uname -m arm64
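The two pairs of outputs above are enough to tell the two terminals apart on an M1. As a small sketch (the `shell_flavor` name is made up for illustration), here is that check as a function. Keep in mind that an Intel Mac prints the same `i386`/`x86_64` pair as Rosetta, so this only distinguishes the two modes on Apple Silicon.

```shell
# Hypothetical helper: label the current terminal from the output of
# `arch` ($1) and `uname -m` ($2), using the values shown above.
# On an Intel Mac the "rosetta" pair is also printed, so only trust
# this on Apple Silicon.
shell_flavor() {
  case "$1/$2" in
    i386/x86_64) echo "rosetta" ;;
    arm64/arm64) echo "native" ;;
    *)           echo "unknown" ;;
  esac
}
```

For example, `shell_flavor "$(arch)" "$(uname -m)"` should print `rosetta` in the Rosetta copy and `native` in the regular one.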

Now we’ve created a copy of our terminal app that can be used for tools not yet available for the M1.

Additional Optional Steps

- For a helpful visual cue about which terminal you’re running, make an adjustment to your terminal’s general theme. I just made the background of mine a little lighter.

- When `Rosetta-iTerm.app` is open, the menu bar still says “iTerm2”. You can change this by opening up `/Applications/Rosetta-iTerm.app/Contents/Info.plist` and making the following edit (you’ll have to restart the app for it to pick up the change):

  ```diff
   <key>CFBundleName</key>
  -<string>iTerm2</string>
  +<string>Rosetta-iTerm2</string>
   <key>CFBundlePackageType</key>
   <string>APPL</string>
  ```
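If you’d rather not open `Info.plist` in an editor, the same edit can be scripted. A minimal sketch, assuming the file is in XML form with the `<string>` value on the line right after `<key>CFBundleName</key>`, as in the diff above; `rename_bundle` is a hypothetical name. On macOS, `plutil -replace CFBundleName -string Rosetta-iTerm2 <path>` is an alternative that doesn’t depend on the file’s line layout.

```shell
# Hypothetical helper: set the CFBundleName value in an XML plist.
# $1 = path to Info.plist, $2 = new bundle name.
# Assumes the <string> value sits on the line after <key>CFBundleName</key>.
# Note: a new name containing "&" would need escaping for awk's sub().
rename_bundle() {
  plist="$1"
  new_name="$2"
  tmp="${plist}.tmp"
  awk -v name="$new_name" '
    # Replace the <string> value on the line right after the CFBundleName key.
    prev_is_key && /<string>/ {
      sub(/<string>[^<]*<\/string>/, "<string>" name "</string>")
    }
    # Remember whether this line was the key, then print the line.
    { prev_is_key = ($0 ~ /<key>CFBundleName<\/key>/); print }
  ' "$plist" > "$tmp" && mv "$tmp" "$plist"
}
```

Usage: `rename_bundle /Applications/Rosetta-iTerm.app/Contents/Info.plist Rosetta-iTerm2`, then restart the app. You may want to copy the file somewhere first, in case its layout differs from what the sketch assumes.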

Step 3: Initial Shell Setup

Depending on whether I’m running in an emulated environment or not, the development tools I install (e.g. Homebrew) and set up (e.g. `pyenv`, `pipx`) will live in different paths.

I trust that those using bash or fish can figure out how to translate this step appropriately.

Setup

1. Create two files: `~/.zshrc.x86_64` and `~/.zshrc.arm64`.

2. Open `~/.zshrc` and add the following snippet:

   ```shell
   # Detect if running Rosetta or not to pull in specific config
   if [ "$(sysctl -n sysctl.proc_translated)" = "1" ]; then
       # rosetta (x86_64)
       source ~/.zshrc.x86_64
   else
       # regular (arm64)
       source ~/.zshrc.arm64
   fi
   ```

3. Optionally, in the same `~/.zshrc` file, add the following snippet to allow you to switch between a Rosetta-based shell and native. This is quite handy, particularly if you skipped [optional step 2](#optional-step-2-iterm2-for-rosetta-and-native).

   ```shell
   alias rosetta='(){ arch -x86_64 $SHELL ; }'
   alias native='(){ arch -arm64e $SHELL ; }'
   ```

   If you also use the `rosetta` prompt function from the Optional Step below, consider renaming one of the two: zsh expands aliases before it looks up functions, so the alias can shadow the function inside `$(rosetta)`.
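The branch in the `~/.zshrc` snippet above can be pulled out into a function, which makes it easy to check which file a given shell would load. A sketch; `zshrc_for` is a hypothetical name, and its argument is assumed to be the output of `sysctl -n sysctl.proc_translated` (`1` under Rosetta, `0` in a native process):

```shell
# Hypothetical helper mirroring the ~/.zshrc branch above.
# $1: output of `sysctl -n sysctl.proc_translated`.
# Anything other than "1" (including empty output) selects the arm64
# file, exactly like the `else` branch in the original snippet.
zshrc_for() {
  if [ "$1" = "1" ]; then
    echo "$HOME/.zshrc.x86_64"
  else
    echo "$HOME/.zshrc.arm64"
  fi
}
```

Running `zshrc_for "$(sysctl -n sysctl.proc_translated)"` in each terminal prints the file that shell should be sourcing.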

We’ll come back to add to the two new zshrc files we’ve created to configure homebrew, pyenv, and pipx.

See Appendix below for the full ~/.zshrc* files.

Optional Step

When running in Rosetta, I’ve added a prefix to my prompt. In my `~/.zshrc.x86_64` file, I defined a new function:

```shell
function rosetta {
    echo "%{$fg_bold[blue]%}(%{$FG[205]%}x86%{$fg_bold[blue]%})%{$reset_color%}"
}
```

Then, I added it to my prompt:

```shell
PROMPT='$(rosetta)$(virtualenv_info)$(collapse_pwd)$(prompt_char)$(git_prompt_info)'
```

That looks like:

Note: in the above PROMPT declaration, virtualenv_info, collapse_pwd, and prompt_char are custom functions; git_prompt_info comes from oh-my-zsh.

Step 4: Native Installation

First, we’ll set up `brew`, `pyenv`, `pyenv-virtualenv`, and `pipx` for our native environment, using their respective defaults: `brew` installs into `/opt/homebrew`, `pyenv` uses `~/.pyenv`, and `pipx` uses `~/.local`.

In the next step, we will do the same within a Rosetta terminal/shell with different directories. It’s particularly helpful to have pyenv and pipx separated like this, so that each installation can’t interact with the other. That is, our Rosetta-installed pyenv-virtualenv or pipx can’t see or delete virtualenvs that were created with the native installed pyenv-virtualenv or pipx, and vice versa. I won’t accidentally activate a virtual environment in the native shell that can only work in Rosetta.

Setup

In the native (not Rosetta) shell or terminal app:

1. Install Homebrew by running:

   ```shell
   /bin/bash -c "$(curl -fsSL https://raw.githubusercontent.com/Homebrew/install/HEAD/install.sh)"
   ```

2. Add the following to the new `~/.zshrc.arm64` file:

   ```shell
   # Brew setup for arm64
   local brew_path="/opt/homebrew/bin"
   local brew_opt_path="/opt/homebrew/opt"
   export PATH="${brew_path}:${PATH}"
   eval "$(${brew_path}/brew shellenv)"
   ```

3. Start a new native shell/terminal session in order to pick up what we’ve added to `~/.zshrc.arm64`.

4. Run the following command to install the required packages for `pyenv` setup:

   ```shell
   brew install openssl readline sqlite3 xz zlib tcl-tk
   ```

5. Install `pyenv`:

   ```shell
   brew install pyenv
   ```

6. Add the following to `~/.zshrc.arm64`:

   ```shell
   # setup for pyenv
   export PYENV_ROOT="$HOME/.pyenv"
   command -v pyenv >/dev/null || export PATH="$PYENV_ROOT/bin:$PATH"
   eval "$(pyenv init -)"
   ```

7. Start a new native shell/terminal session again in order to pick up what we’ve added to `~/.zshrc.arm64`.

8. Install `pyenv-virtualenv`:

   ```shell
   brew install pyenv-virtualenv
   ```

9. Start a new native shell/terminal session again.

10. Install `pipx`:

    ```shell
    brew install pipx
    ```

11. Add the following to `~/.zshrc.arm64`:

    ```shell
    # `pipx` setup
    export PATH="$PATH:$HOME/.local/bin"
    export PIPX_BIN_DIR="$HOME/.local/bin"
    export PIPX_HOME="$HOME/.local/pipx"
    ```

12. Start a new native shell/terminal session again in order to pick up what we’ve added to `~/.zshrc.arm64`.
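Once the native shell is set up, a quick sanity check is that `brew` and `pipx` resolve under `/opt/homebrew` rather than `/usr/local`. A sketch with hypothetical function names; `pyenv` is left out because `pyenv init -` wraps it in a shell function, so `command -v pyenv` prints the function name rather than a path.

```shell
# Hypothetical helpers for checking which Homebrew a tool comes from.

# Succeeds if path $1 lives under directory $2.
under_prefix() {
  case "$1" in
    "$2"/*) return 0 ;;
    *)      return 1 ;;
  esac
}

# Reports, for each tool, whether `command -v` finds it under prefix $1
# (e.g. /opt/homebrew in a native shell). Defined here, not called.
# The variable is named `found` (not `path`) because zsh ties `path` to PATH.
check_tools() {
  for tool in brew pipx; do
    found="$(command -v "$tool")"
    if under_prefix "$found" "$1"; then
      echo "$tool: ok ($found)"
    else
      echo "$tool: not under $1 (found: ${found:-nothing})"
    fi
  done
}
```

Run `check_tools /opt/homebrew` in the native terminal; after Step 5, `check_tools /usr/local` in the Rosetta one should report the same.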

Step 5: Rosetta Installation

Now, we’re going to install and set up `brew`, `pyenv`, `pyenv-virtualenv`, and `pipx` for Rosetta. `brew` will automatically install into `/usr/local`, while we’ll have to configure `pyenv` and `pipx` to use different directories when setting up Python versions and virtualenvs.

Caution! The steps below look quite similar to the previous step. Pay attention to:

- The different paths for `brew`, `pyenv`, and `pipx`; and
- The new directories for `pyenv` and `pipx`.

Setup

In the Rosetta-enabled (not native) terminal app or shell:

1. Install Homebrew by running:

   ```shell
   /bin/bash -c "$(curl -fsSL https://raw.githubusercontent.com/Homebrew/install/HEAD/install.sh)"
   ```

2. Add the following to the new `~/.zshrc.x86_64` file:

   ```shell
   # Brew paths for x86_64
   local brew_path="/usr/local/bin"
   local brew_opt_path="/usr/local/opt"
   export PATH="${brew_path}:${PATH}"
   eval "$(${brew_path}/brew shellenv)"
   ```

3. Start a new Rosetta shell/terminal session in order to pick up what we’ve added to `~/.zshrc.x86_64`.

4. Run the following command to install the required packages for `pyenv` setup:

   ```shell
   brew install openssl readline sqlite3 xz zlib tcl-tk
   ```

5. Install `pyenv`:

   ```shell
   brew install pyenv
   ```

6. Create a new directory for `pyenv` running in Rosetta:

   ```shell
   mkdir -p ~/.pyenv.x86_64
   ```

7. Add the following to `~/.zshrc.x86_64`:

   ```shell
   # setup for pyenv
   export PYENV_ROOT="$HOME/.pyenv.x86_64"
   command -v pyenv >/dev/null || export PATH="$PYENV_ROOT/bin:$PATH"
   eval "$(pyenv init -)"
   ```

8. Start a new Rosetta shell/terminal session in order to pick up what we’ve added to `~/.zshrc.x86_64`.

9. Install `pyenv-virtualenv`:

   ```shell
   brew install pyenv-virtualenv
   ```

10. Start a new Rosetta shell/terminal session.

11. Install `pipx`:

    ```shell
    brew install pipx
    ```

12. Create a new directory for `pipx` running in Rosetta:

    ```shell
    mkdir -p ~/.local/x86_64
    ```

13. Add the following to `~/.zshrc.x86_64`:

    ```shell
    # `pipx` setup
    export PATH="$PATH:$HOME/.local/x86_64/bin"
    export PIPX_BIN_DIR="$HOME/.local/x86_64/bin"
    export PIPX_HOME="$HOME/.local/x86_64/pipx"
    ```

14. Start a new Rosetta shell/terminal session in order to pick up what we’ve added to `~/.zshrc.x86_64`.
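To confirm that each terminal picked up its own config, you can compare `PYENV_ROOT` and `PIPX_HOME` against the directories chosen in Steps 4 and 5. A sketch with a hypothetical function name; it takes the machine name as an argument, which you’d pass from `uname -m` (it reports `x86_64` inside Rosetta and `arm64` natively, per Step 2).

```shell
# Hypothetical check: does this shell use the expected per-arch dirs?
# $1 = "arm64" or "x86_64". Expected values mirror ~/.zshrc.arm64 and
# ~/.zshrc.x86_64. Returns 0 if both match, 1 on a mismatch, 2 if $1
# is unrecognized. (`rc` is used because zsh reserves `status`.)
check_arch_env() {
  case "$1" in
    arm64)  want_pyenv="$HOME/.pyenv";        want_pipx="$HOME/.local/pipx" ;;
    x86_64) want_pyenv="$HOME/.pyenv.x86_64"; want_pipx="$HOME/.local/x86_64/pipx" ;;
    *) echo "unknown architecture: $1" >&2; return 2 ;;
  esac
  rc=0
  if [ "$PYENV_ROOT" != "$want_pyenv" ]; then
    echo "PYENV_ROOT is '$PYENV_ROOT', expected '$want_pyenv'"
    rc=1
  fi
  if [ "$PIPX_HOME" != "$want_pipx" ]; then
    echo "PIPX_HOME is '$PIPX_HOME', expected '$want_pipx'"
    rc=1
  fi
  return "$rc"
}
```

In each terminal, `check_arch_env "$(uname -m)" && echo "environment looks right"` should print the success message, or list which variable points at the other architecture’s directory.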

Miscellany

Docker

The Docker for Mac app works on Apple Silicon just fine. You may need to explicitly define which platform is needed (e.g. `--platform=linux/arm64` or `--platform=linux/amd64`) when building or pulling.
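When the image’s target differs from your laptop, it can help to derive the `--platform` value from the target machine’s `uname -m` rather than hard-coding it. A small sketch; `docker_platform_for` and the `my-app` tag are hypothetical, and only the two platforms mentioned above are handled:

```shell
# Hypothetical helper: map a target machine's `uname -m` value to the
# Docker --platform string. Only linux/amd64 and linux/arm64 are handled.
docker_platform_for() {
  case "$1" in
    x86_64|amd64)  echo "linux/amd64" ;;
    arm64|aarch64) echo "linux/arm64" ;;
    *) echo "unsupported architecture: $1" >&2; return 1 ;;
  esac
}
```

For example, `docker build --platform "$(docker_platform_for x86_64)" -t my-app .` builds an amd64 image on an M1 (under emulation, so expect it to be slower).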

Tensorflow on Docker

Tensorflow does not have official binaries for M1 machines (i.e. one can’t simply `pip install tensorflow`), but has released a separate package, `tensorflow-macos`. However, there is no pre-built native solution for running Tensorflow in Docker on an M1; it must run emulated (`--platform=linux/amd64`), or you must build Tensorflow from source.

As an anecdote, I’ve found that running a Tensorflow-based model in Docker within an emulated environment is significantly slower than running in a native environment. Very roughly, it’s about 10x slower to get a prediction in an emulated environment than native. Therefore, it may be worth it to build Tensorflow from source as a base image, then copy the built binaries into the images you’re developing on.

Warning: Building Tensorflow from source can take a couple of hours.

See the Dockerfiles in the Appendix.

Appendix

zshrc files

Caution: Be sure to follow the installation steps in Step 4 and Step 5 above in order for these `~/.zshrc*` files to work.

~/.zshrc

This snips out bits of my own custom setup:

```shell
# <-- snip -->

# Rosetta or native-related zsh config
if [ "$(sysctl -n sysctl.proc_translated)" = "1" ]; then
    # rosetta (x86_64)
    source ~/.zshrc.x86_64
else
    # regular (arm64)
    source ~/.zshrc.arm64
fi

# <-- snip -->
```

~/.zshrc.x86_64

```shell
# `brew` setup
local brew_path="/usr/local/bin"
local brew_opt_path="/usr/local/opt"
export PATH="${brew_path}:${PATH}"
eval "$(${brew_path}/brew shellenv)"

# `pyenv` setup (matches the directory created in Step 5)
export PYENV_ROOT="$HOME/.pyenv.x86_64"
command -v pyenv >/dev/null || export PATH="$PYENV_ROOT/bin:$PATH"
eval "$(pyenv init -)"

# `pipx` setup
export PATH="$PATH:$HOME/.local/x86_64/bin"
export PIPX_BIN_DIR="$HOME/.local/x86_64/bin"
export PIPX_HOME="$HOME/.local/x86_64/pipx"

# pretty stuff
function rosetta {
    echo "%{$fg_bold[blue]%}(%{$FG[205]%}x86%{$fg_bold[blue]%})%{$reset_color%}"
}
PROMPT='$(rosetta)$(virtualenv_info) $(collapse_pwd)$(prompt_char)$(git_prompt_info)'
```

~/.zshrc.arm64

```shell
# `brew` setup
local brew_path="/opt/homebrew/bin"
local brew_opt_path="/opt/homebrew/opt"
export PATH="${brew_path}:${PATH}"
eval "$(${brew_path}/brew shellenv)"

# `pyenv` setup
export PYENV_ROOT="$HOME/.pyenv"
command -v pyenv >/dev/null || export PATH="$PYENV_ROOT/bin:$PATH"
eval "$(pyenv init -)"

# `pipx` setup
export PATH="$PATH:$HOME/.local/bin"
export PIPX_BIN_DIR="$HOME/.local/bin"
export PIPX_HOME="$HOME/.local/pipx"

# pretty stuff
PROMPT='$(virtualenv_info)$(collapse_pwd)$(prompt_char)$(git_prompt_info)'
```

Dockerfile for Tensorflow

This is one single Dockerfile, but it’d probably be good to separate into two.

```dockerfile
# This builds tensorflow v2.7.0 from source to be able to run on M1 within Docker
# as they do not provide binaries for arm64 platforms.
# https://github.com/tensorflow/tensorflow/issues/52845
#
# Adapted from https://www.tensorflow.org/install/source
#
# Warning!! this can take 1 - 2 hours to build.
#
# If a different version of tf is needed, you'll need to figure out the min
# bazel version (see doc link above). You may also need to figure out the
# build dependency version limitations - I learned `numpy<1.18` and `numba<0.55`
# the hard way :-!.
#
# --platform isn't needed particularly if building on M1, but it's more
# for info purposes, and as a safe guard if a non-M1 arch tries to build this
# Dockerfile
FROM --platform=linux/arm64 python:3.8-buster AS tf_build

WORKDIR /usr/src/

# minimum bazel version for tf 2.7.0 to build
ENV USE_BAZEL_VERSION=3.7.2

RUN apt-get update \
    && apt-get install -y \
        # deps for building tf
        python3-dev curl gnupg \
    && rm -rf /var/lib/apt/lists/*

RUN pip install -U pip setuptools

# tensorflow build dependencies
RUN pip install -U wheel && \
    # limit numpy https://github.com/tensorflow/tensorflow/issues/40688
    # & numba to work with numpy
    pip install "numpy<1.18" "numba<0.55" && \
    pip install -U keras_preprocessing --no-deps

# install bazel via bazelisk
RUN curl -sL https://deb.nodesource.com/setup_11.x | bash -
RUN apt-get -y install nodejs && \
    npm install -g @bazel/bazelisk

# clone tf
RUN git clone https://github.com/tensorflow/tensorflow.git /usr/src/tensorflow
WORKDIR /usr/src/tensorflow

# trying to build tf 2.7.0
RUN git checkout v2.7.0

# and let's see if we can build
RUN ./configure

# build pip package - this took me 1 - 1.5 hrs!
RUN bazel build \
    --incompatible_restrict_string_escapes=false \
    --config=noaws \
    # optionally limit ram if needed (default to host ram)
    # --local_ram_resources=3200 \
    # optionally limit cpus if needed (default to host number)
    # --local_cpu_resources=8 \
    //tensorflow/tools/pip_package:build_pip_package

# create a wheel in /tmp/tensorflow_pkg
RUN ./bazel-bin/tensorflow/tools/pip_package/build_pip_package /tmp/tensorflow_pkg

# the tf io dep is not available on PyPI for arm64, so must build this from source too
RUN git clone https://github.com/tensorflow/io.git /usr/src/io
WORKDIR /usr/src/io
RUN python setup.py bdist_wheel \
    --project tensorflow_io_gcs_filesystem \
    --dist-dir /tmp/tensorflow_io_pkg

#####
# Probably where you want to create a second Dockerfile
#####

FROM --platform=linux/arm64 python:3.8-buster

# copy tf and deps
COPY --from=tf_build /tmp/tensorflow_io_pkg/*.whl /usr/src/io/
COPY --from=tf_build /tmp/tensorflow_pkg/*.whl /usr/src/tf/

# (re)install tf build deps first before tf
RUN pip install "numpy<1.18" "numba<0.55" && \
    pip install -U keras_preprocessing --no-deps

# install tf & dependency
RUN pip install /usr/src/io/*.whl && \
    pip install /usr/src/tf/*.whl
```